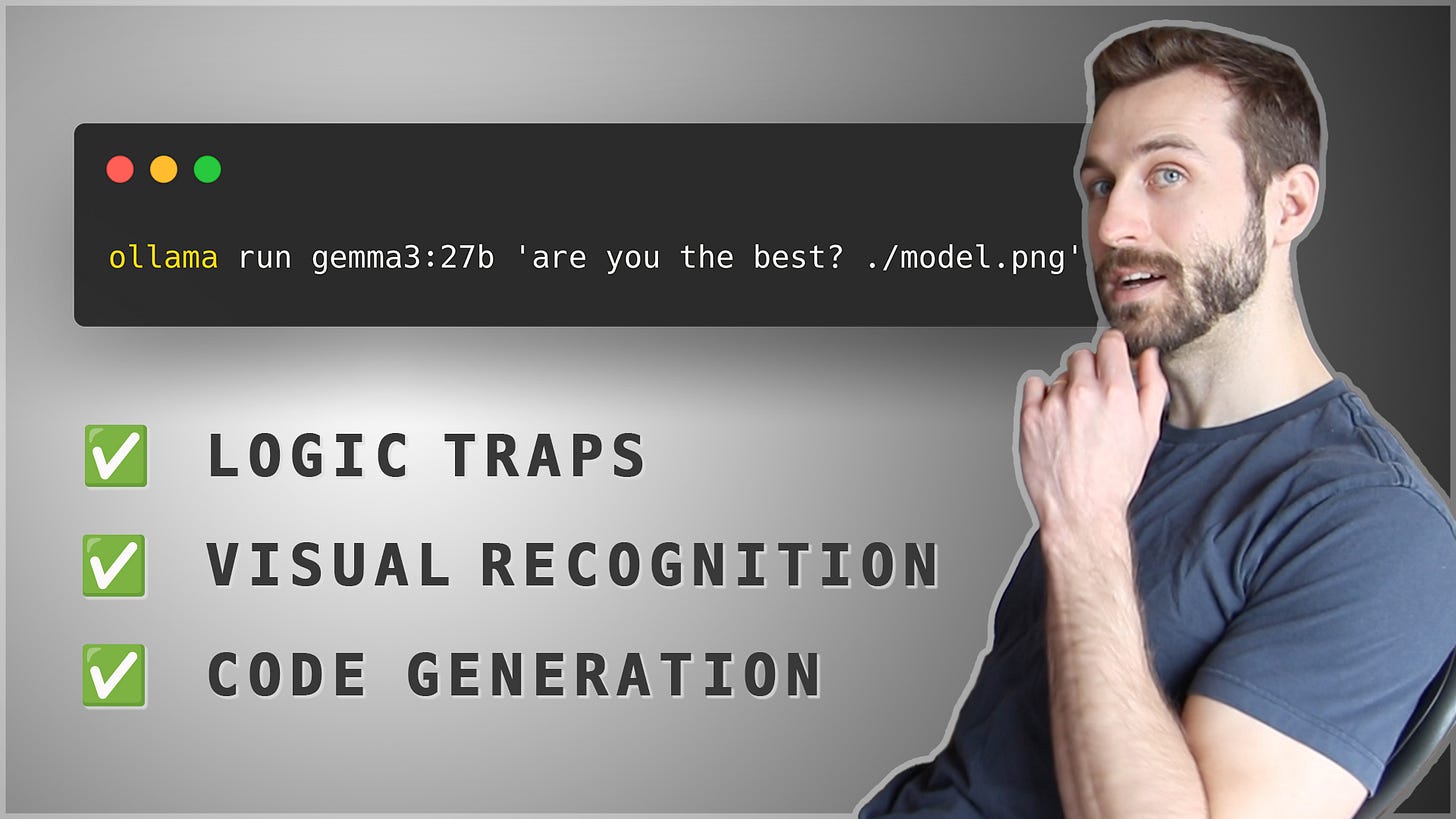

Ultimate Gemma 3 Guide — Testing 1b, 4b, 12b and 27b with Ollama

Gemma 3 is the best model to run locally right now.

Google is crushing the AI game right now, and their open source commitment is as strong as ever.

In this video, I compared the four quantized Gemma 3 variants on my local hardware (MacBook Pro, 48GB RAM).

Check out the video on YouTube

AI Engineer Roadmap 🚀

Thanks to all who took the plunge last month and started in on your AI Engineer Roadmap — https://zazencodes.com/

By the way, you can hop into my Discord server any time and ask me anything!

Topics from this week’s video

Gemma 3 Model Overview

Four versions: 1B, 4B, 12B, and 27B parameters

Released March 2025; top performer on Ollama leaderboards

Strong multilingual capabilities and 128K token context window

3 of 4 models are multimodal (1B is text-only)

Google has released an official technical report

Installation & Setup

Requires Ollama installed and running (`ollama serve`)

Model sizes:

1B: 800MB

4B: 3.3GB

12B: 8.1GB

27B: 17GB

Memory usage monitored via `nvtop`

Models can be run in parallel with enough RAM
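For scripted comparisons, the same models can be queried over Ollama's local HTTP API instead of the interactive CLI. Here's a minimal sketch, assuming the default endpoint `http://localhost:11434/api/generate` and the model tags `gemma3:1b`, `gemma3:4b`, `gemma3:12b` and `gemma3:27b`. Neither is spelled out in the notes above, so check `ollama list` on your machine. The helper names (`build_request`, `ask`, `ask_all`) are made up for illustration.

```python
import json
import urllib.request

# Assumed defaults: Ollama's local endpoint and the Gemma 3 tags as pulled with `ollama pull`.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAGS = ["gemma3:1b", "gemma3:4b", "gemma3:12b", "gemma3:27b"]


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for one model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


def ask_all(prompt: str) -> dict[str, str]:
    """Ask every model the same question, one after another."""
    return {model: ask(model, prompt) for model in MODEL_TAGS}
```

With `ollama serve` running and the models pulled, `ask_all("your question")` returns one answer per model, which makes side-by-side comparison easy.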

Logic Trap Testing

Tests on negation, linguistic consistency, spatial reasoning, counting, significant digits, and algebra

Negation trap:

Only 4B model answered correctly

Linguistic A-test (alliteration):

All models succeeded

Spatial reasoning:

Only 4B model answered correctly

Counting letters in “Lollapalooza”:

12B and 27B succeeded

4B got stuck; 1B failed

Significant digits:

12B and 27B correct

1B and 4B failed

Algebra with misleading pattern:

4B, 12B, and 27B succeeded

1B failed
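To see how the models stack up overall, the pass/fail results above can be tallied in a few lines. This is just a bookkeeping sketch. The test names are shortened labels, and the 4B getting stuck on the letter-counting test is counted as a fail.

```python
# Pass/fail results transcribed from the list above.
ALL_MODELS = ["1B", "4B", "12B", "27B"]
PASSED = {
    "negation": {"4B"},
    "alliteration": set(ALL_MODELS),
    "spatial reasoning": {"4B"},
    "letter counting": {"12B", "27B"},
    "significant digits": {"12B", "27B"},
    "algebra": {"4B", "12B", "27B"},
}


def tally(passed: dict[str, set[str]]) -> dict[str, int]:
    """Count how many tests each model passed."""
    return {m: sum(m in winners for winners in passed.values()) for m in ALL_MODELS}


scores = tally(PASSED)
for model in ALL_MODELS:
    print(f"{model}: {scores[model]}/{len(PASSED)}")
```

By this count the 4B, 12B and 27B models each pass four of the six tests, and the 1B passes one. The 4B gets there on different tests than the two larger models.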

Visual Recognition Tests

Used images of Mayan glyphs, woodblocks, dog at a storefront, Mexico City, Chad GPT, Japanese cards, and more

Key takeaways:

Only multimodal models (4B, 12B, 27B) capable of image analysis

Visual performance:

Often hallucinated or misidentified artwork

27B model performed best but still flawed

Provided incorrect URLs

Context clues (like Spanish text) helped improve output

Geographic test:

4B guessed San Rafael

27B correctly identified Roma Norte

Humor/context recognition weak (e.g., father-in-law at Grand Canyon)
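If you want to repeat the image tests from a script, Ollama's generate endpoint accepts base64-encoded images alongside the prompt. Here's a minimal sketch of building that request body. It assumes the `gemma3:*` model tags, and the function name is hypothetical. It only prepares the JSON, so sending it to `http://localhost:11434/api/generate` is left to whatever HTTP client you prefer.

```python
import base64
import json

# Assumption: gemma3:1b is text-only, per the overview; the other tags accept images.
VISION_MODELS = {"gemma3:4b", "gemma3:12b", "gemma3:27b"}


def build_image_payload(model: str, prompt: str, image_bytes: bytes) -> str:
    """Return the JSON body for an Ollama generate call with one attached image."""
    if model not in VISION_MODELS:
        raise ValueError(f"{model} is not a multimodal model")
    payload = {
        "model": model,
        "prompt": prompt,
        # Ollama expects each image as a base64 string, not raw bytes.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }
    return json.dumps(payload)
```

Read the image with something like `Path("photo.jpg").read_bytes()` (the file name is a placeholder) and pass the bytes in.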

Image Identification Highlights

Mayan glyphs:

Misidentified as medieval art

Spanish-labeled version helped larger models understand context

Chad GPT:

1B hallucinated identities

4B and up recognized as fictional

Bulldog at dog shop:

Cute interpretations

No recognition that dogs can’t read

Mexico City neighborhood:

4B close, 27B correct

Hokusai woodblocks:

Partial credit for identifying famous prints

Failed to match correct positions

Japanese playing cards:

All models failed; major hallucinations (e.g., maps, ritual diagrams)

Grand Canyon safety assessment:

All models deemed the situation unsafe

No recognition of visual humor

Code Generation Test

Prompt: create a rotating quote carousel webpage with dark theme

Evaluation across all four models:

1B:

Fastest

Output rough HTML, no file creation

4B:

Better formatting

Still didn’t auto-create file

12B:

Produced sleek design

No embedded quotes

Best visual appeal

27B:

Slowest but most feature-complete

Actually created the file

Functional, but a bit janky
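Since most of the models returned HTML in the reply without writing a file, one workaround is to pull the code out of the reply and save it yourself. The sketch below is a hypothetical helper, not what was done in the video. It assumes the model wraps its HTML in a fenced block, and `carousel.html` is a made-up file name.

```python
import re
from pathlib import Path

FENCE = "`" * 3  # built at runtime so this example contains no literal code fence

# Matches a fenced block whose opening fence is bare or tagged "html".
BLOCK_RE = re.compile(FENCE + r"(?:html)?[ \t]*\n(.*?)" + FENCE, re.DOTALL | re.IGNORECASE)


def extract_html(reply: str) -> str:
    """Return the first matching fenced block, or the whole reply if none is found."""
    match = BLOCK_RE.search(reply)
    return (match.group(1) if match else reply).strip()


def save_page(reply: str, path: str = "carousel.html") -> Path:
    """Write the extracted HTML to disk and return the file path."""
    out = Path(path)
    out.write_text(extract_html(reply), encoding="utf-8")
    return out
```

Open the saved file in a browser to compare the designs from each model.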

System Performance Observations

Full parallel model testing kept memory within 48GB limit

GPU at 100% utilization, indicating the models were making full use of the GPU

Fans kicked in while the 27B model was processing
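A quick back-of-the-envelope check shows why all four models fit at once. This sketch adds up the download sizes listed in the setup section, treating 800MB as 0.8GB. Treat the total as a lower bound: actual memory use while running is higher once the context cache and everything else on the machine are counted.

```python
# Download sizes from the setup section, in GB.
MODEL_SIZES_GB = {"1B": 0.8, "4B": 3.3, "12B": 8.1, "27B": 17.0}
TOTAL_RAM_GB = 48


def fits_in_memory(sizes: dict[str, float], ram_gb: float) -> tuple[float, bool]:
    """Return the combined weight size and whether it is below the RAM limit."""
    total = round(sum(sizes.values()), 1)
    return total, total < ram_gb


total, ok = fits_in_memory(MODEL_SIZES_GB, TOTAL_RAM_GB)
print(f"Combined weights: {total} GB of {TOTAL_RAM_GB} GB -> fits: {ok}")
```

The weights alone come to about 29.2GB, which leaves headroom on a 48GB machine.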

The quickest way to secure people's minds is by demonstrating, as simply as possible, how an action will benefit them.

Robert Greene